In my last post, I made the point that p-values should not necessarily be considered sufficient evidence (or evidence at all) in drawing conclusions about associations we are interested in exploring. When it comes to contingency tables that represent the outcomes for two categorical variables, it isn’t so obvious what measure of association should augment (or replace) the $\chi^2$ statistic.

I described a model-based measure of effect to quantify the strength of an association in the particular case where one of the categorical variables is ordinal. This can arise, for example, when we want to compare Likert-type responses across multiple groups. The measure of effect I focused on - the cumulative proportional odds - is quite useful, but is potentially limited for two reasons. First, the proportional odds assumption may not be reasonable, potentially leading to biased estimates. Second, both factors may be nominal (i.e. not ordinal), in which case a cumulative odds model is inappropriate.

An alternative, non-parametric measure of association that can be broadly applied to any contingency table is Cramér’s V, which is calculated as

$$V = \sqrt{\frac{\chi^2/N}{\min(r-1, c-1)}}$$

where $\chi^2$ is from the Pearson’s chi-squared test, $N$ is the total number of responses across all groups, $r$ is the number of rows in the contingency table, and $c$ is the number of columns. $V$ ranges from $0$ to $1$, with $0$ indicating no association, and $1$ indicating the strongest possible association. (In the addendum, I provide a little detail as to why $V$ cannot exceed $1$.)

Simulating independence

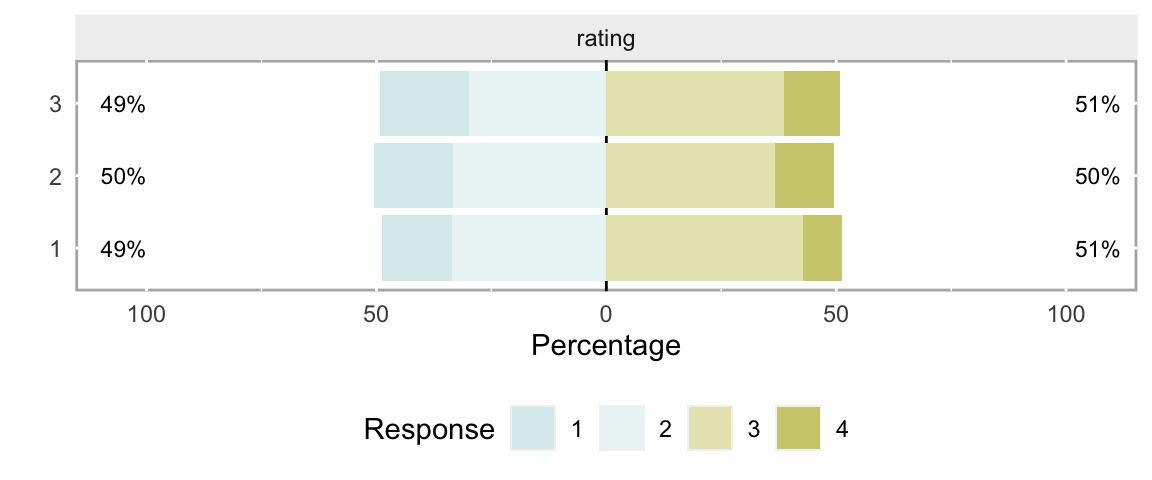

In this first example, the distribution of ratings is independent of the group membership. In the data generating process, the probability distribution for rating has no reference to grp, so we would expect similar distributions of the response across the groups:

    library(simstudy)

    def <- defData(varname = "grp",
                   formula = "0.3; 0.5; 0.2", dist = "categorical")
    def <- defData(def, varname = "rating",
                   formula = "0.2;0.3;0.4;0.1", dist = "categorical")

    set.seed(99)
    dind <- genData(500, def)

And in fact, the distributions across the 4 rating options do appear pretty similar for each of the 3 groups:

In order to estimate $V$ from this sample, we use the $\chi^2$ formula (I explored the chi-squared test with simulations in a two-part post here and here):

    observed <- dind[, table(grp, rating)]
    obs.dim <- dim(observed)

    # append row and column totals to the observed table
    getmargins <- addmargins(observed, margin = seq_along(obs.dim),
                             FUN = sum, quiet = TRUE)

    rowsums <- getmargins[1:obs.dim[1], "sum"]
    colsums <- getmargins["sum", 1:obs.dim[2]]

    # expected counts under independence: (row total * column total) / N
    expected <- rowsums %*% t(colsums) / sum(observed)

    X2 <- sum( ( (observed - expected)^2) / expected)
    X2

    ## [1] 3.45

And to check our calculation, here’s a comparison with the estimate from the chisq.test function:

    chisq.test(observed)

    ##
    ##  Pearson's Chi-squared test
    ##
    ## data:  observed
    ## X-squared = 3.5, df = 6, p-value = 0.8

With $\chi^2$ in hand, we can estimate $V$, which we expect to be quite low:

    sqrt( (X2/sum(observed)) / (min(obs.dim) - 1) )

    ## [1] 0.05874

Again, to verify the calculation, here is an alternative estimate using the DescTools package, with a 95% confidence interval:

    library(DescTools)

    CramerV(observed, conf.level = 0.95)

    ## Cramer V   lwr.ci   upr.ci
    ##  0.05874  0.00000  0.08426

Group membership matters

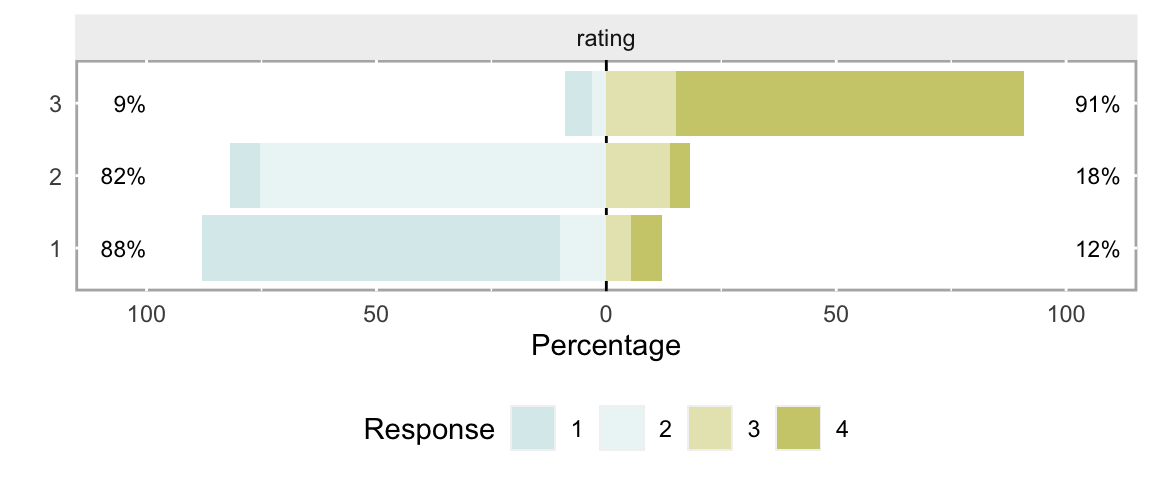

In this second scenario, the distribution of rating is specified directly as a function of group membership. This is an extreme example, designed to elicit a very high value of $V$:

    def <- defData(varname = "grp",
                   formula = "0.3; 0.5; 0.2", dist = "categorical")

    defc <- defCondition(condition = "grp == 1",
                         formula = "0.75; 0.15; 0.05; 0.05", dist = "categorical")
    defc <- defCondition(defc, condition = "grp == 2",
                         formula = "0.05; 0.75; 0.15; 0.05", dist = "categorical")
    defc <- defCondition(defc, condition = "grp == 3",
                         formula = "0.05; 0.05; 0.15; 0.75", dist = "categorical")

    # generate the data
    dgrp <- genData(500, def)
    dgrp <- addCondition(defc, dgrp, "rating")

It is readily apparent that the structure of the data is highly dependent on the group:

And, as expected, the estimated $V$ is quite high:

    observed <- dgrp[, table(grp, rating)]

    CramerV(observed, conf.level = 0.95)

    ## Cramer V   lwr.ci   upr.ci
    ##   0.7400   0.6744   0.7987

Interpretation of Cramér’s V using proportional odds

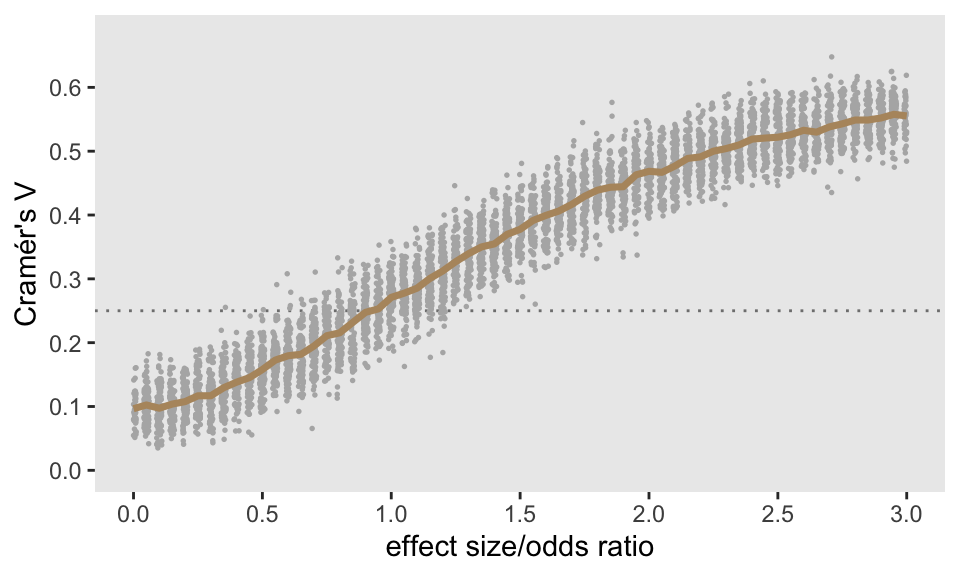

A key question is how we should interpret $V$. Some folks suggest that $V \le 0.10$ is very weak and anything over $0.25$ could be considered quite strong. I decided to explore this a bit by seeing how various cumulative odds ratios relate to estimated values of $V$.

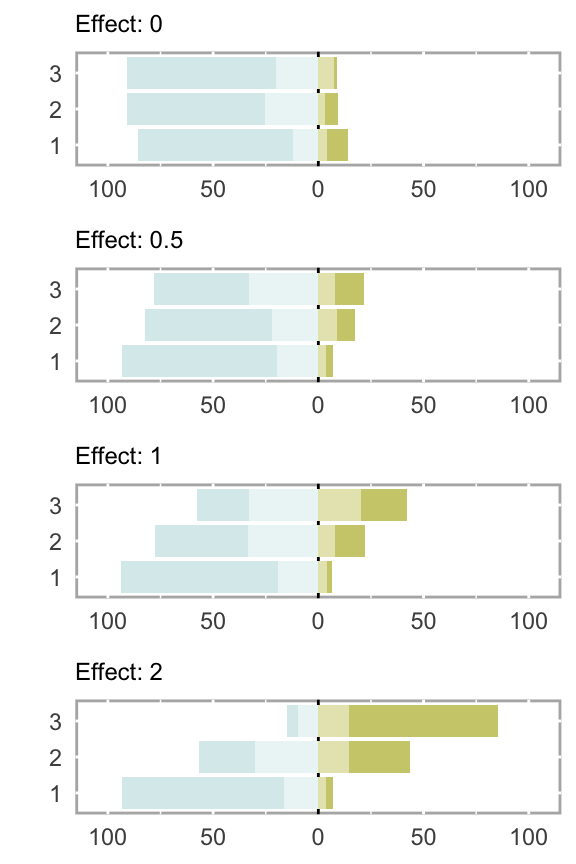

To give a sense of what some log odds ratios (LORs) look like, I have plotted distributions generated from cumulative proportional odds models, using LORs ranging from 0 to 2. At 0.5, there is slight separation between the groups, and by the time we reach 1.0, the differences are considerably more apparent:

My goal was to see how estimated values of $V$ change with the underlying LORs. I generated 100 data sets for each LOR ranging from 0 to 3 (increasing by increments of 0.05) and estimated $V$ for each data set (of which there were 6100). The plot below shows the mean $V$ estimate (in yellow) at each LOR, with the individual estimates represented by the grey points. I’ll let you draw your own conclusions, but (in this scenario at least), it does appear that 0.25 (the dotted horizontal line) signifies a pretty strong relationship, as LORs larger than 1.0 generally have estimates of $V$ that exceed this threshold.

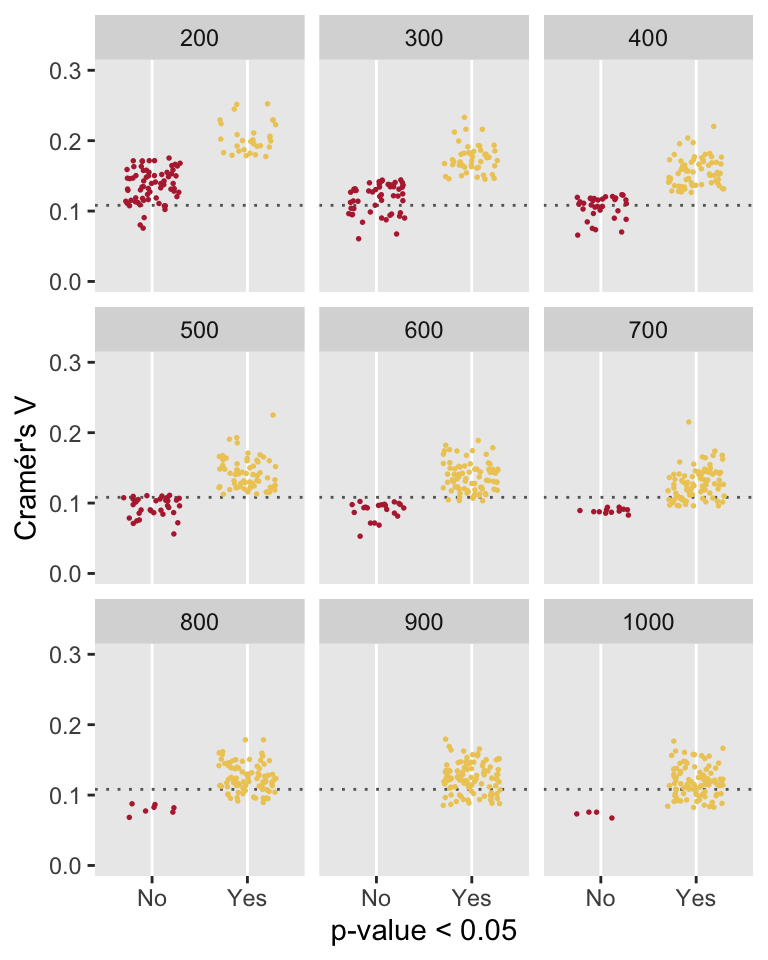
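The simulation described above can be sketched in base R. This is only an illustration under my own assumptions, not the code behind the plot: the helper names `cramers_v` and `sim_table` are hypothetical, the three groups are coded 0, 1, 2 with the LOR applied per step, and I use fewer LORs and replications to keep it quick.

```r
# Cramér's V from a two-way table of counts
cramers_v <- function(tab) {
  x2 <- unname(suppressWarnings(chisq.test(tab, correct = FALSE))$statistic)
  sqrt((x2 / sum(tab)) / (min(dim(tab)) - 1))
}

# One simulated group-by-rating table from a cumulative proportional odds
# model (assumption: groups coded 0, 1, 2; the LOR shifts each step)
sim_table <- function(n, lor, grp_p = c(0.3, 0.5, 0.2),
                      base_p = c(0.2, 0.3, 0.4, 0.1)) {
  grp   <- sample(0:2, n, replace = TRUE, prob = grp_p)
  cuts  <- qlogis(cumsum(base_p)[-length(base_p)])  # baseline cumulative logits
  cum_p <- plogis(outer(-lor * grp, cuts, "+"))     # n x 3: P(rating <= k | grp)
  rating <- 1 + rowSums(runif(n) > cum_p)           # inverse-CDF draw of the rating
  table(grp = factor(grp, levels = 0:2),
        rating = factor(rating, levels = 1:4))
}

set.seed(2718)
lors <- seq(0, 2, by = 0.5)

# mean estimated V over 50 simulated data sets at each LOR
mean_v <- sapply(lors, function(lor) {
  mean(replicate(50, cramers_v(sim_table(500, lor))))
})

data.frame(lor = lors, mean_v = round(mean_v, 3))
```

Note that even at an LOR of 0 the mean estimate sits above zero, since $V$ is computed from a $\chi^2$ statistic that is never negative.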

p-values and Cramér’s V

To end, I am just going to circle back to where I started at the beginning of the previous post, thinking about p-values and effect sizes. Here, I’ve generated data sets with a relatively small between-group difference, using a modest LOR of 0.40 that translates to a measure of association $V$ just over 0.10. I varied the sample size from 200 to 1000. For each data set, I estimated $V$ and recorded whether or not the p-value from a chi-squared test would have been deemed “significant” (i.e. p-value $< 0.05$) or not. The key point here is that as the sample size increases and we rely solely on the chi-squared test, we are increasingly likely to attach importance to the findings even though the measure of association is quite small. However, if we actually consider a measure of association like Cramér’s $V$ (or some other measure that you might prefer) in drawing our conclusions, we are less likely to get over-excited about a result when perhaps we shouldn’t.

I should also comment that at smaller sample sizes, we will probably over-estimate the measure of association. Here, it would be important to consider some measure of uncertainty, like a 95% confidence interval, to accompany the point estimate. Otherwise, as in the case of larger sample sizes, we would run the risk of declaring success or finding a difference when it may not be warranted.
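Both points can be illustrated with a short base R sketch. As before, this rests on my own assumptions rather than the original simulation code: the groups are coded 0, 1, 2, the joint cell probabilities come from a proportional odds model with an LOR of 0.40, tables are drawn with `rmultinom`, and the object names (`joint`, `pop_v`, `res`) are hypothetical.

```r
# Joint cell probabilities implied by a proportional odds model, LOR = 0.4
grp_p <- c(0.3, 0.5, 0.2)
cuts  <- qlogis(cumsum(c(0.2, 0.3, 0.4, 0.1))[1:3])
cond  <- t(sapply(0:2, function(g) diff(c(0, plogis(cuts - 0.4 * g), 1))))
joint <- grp_p * cond                  # 3 x 4: row i scaled by P(grp = i)

# "population" V implied by these cell probabilities
e     <- outer(rowSums(joint), colSums(joint))
pop_v <- sqrt(sum((joint - e)^2 / e) / 2)

set.seed(314)
sizes <- c(200, 400, 600, 800, 1000)

res <- t(sapply(sizes, function(n) {
  sims <- replicate(200, {
    tab <- matrix(rmultinom(1, n, as.vector(joint)), nrow = 3)
    ct  <- suppressWarnings(chisq.test(tab))
    c(v = sqrt(unname(ct$statistic) / n / 2), sig = ct$p.value < 0.05)
  })
  # mean estimated V and share of "significant" tests at this sample size
  c(n = n, mean_v = mean(sims["v", ]), prop_sig = mean(sims["sig", ]))
}))

pop_v
res
```

Comparing `mean_v` with `pop_v` across rows of `res` shows how much the estimate is inflated at each sample size, while `prop_sig` tracks how often the chi-squared test alone would have flagged the same small association.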

Addendum: Why is Cramér’s V $\le$ 1?

Cramér’s $V = \sqrt{\frac{\chi^2/N}{\min(r-1, c-1)}}$, which cannot be lower than $0$. $V = 0$ when $\chi^2 = 0$, which will only happen when the observed cell counts for all cells equal the expected cell counts for all cells. In other words, only when there is complete independence.

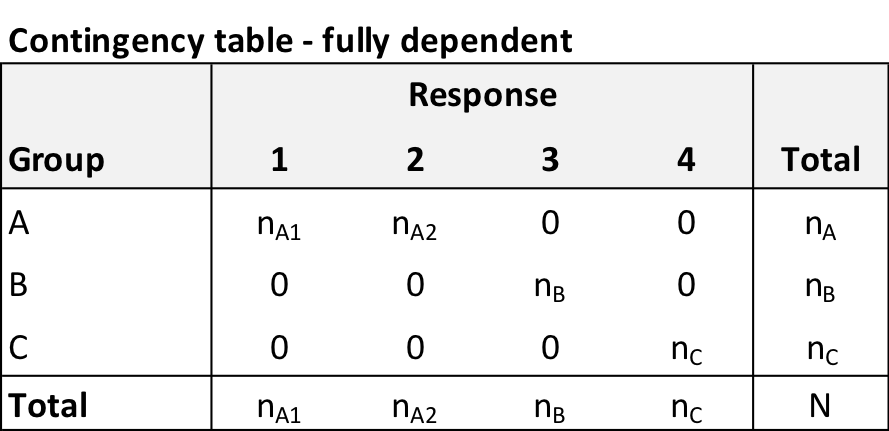

It is also the case that $V$ cannot exceed $1$. I will provide some intuition for this using a relatively simple example and some algebra. Consider the following contingency table which represents complete separation of the three groups:

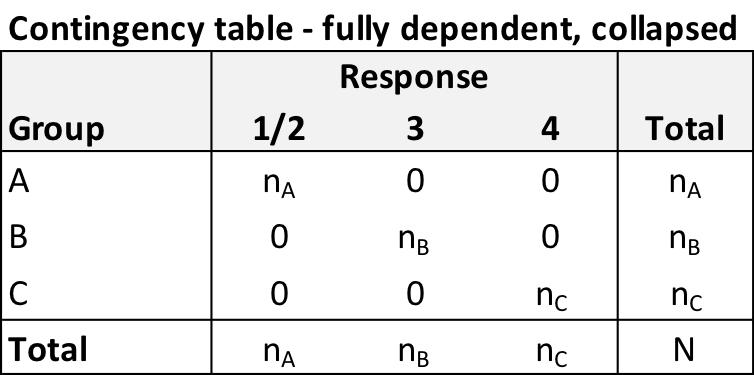

I would argue that this initial table is equivalent to the following table that collapses two of the responses - no information about the dependence has been lost or distorted. In this case, each group ends up in a column of its own.

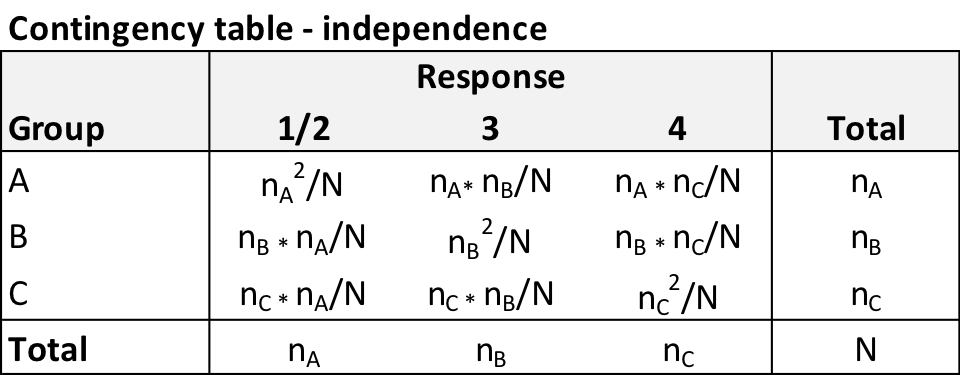

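In order to calculate $\chi^2$, we need to derive the expected values based on this collapsed contingency table. If $p_{ij}$ is the probability for cell row $i$ and column $j$, and $p_{i\cdot}$ and $p_{\cdot j}$ are the row $i$ and column $j$ totals, respectively, then independence implies that $p_{ij} = p_{i\cdot}p_{\cdot j}$. In this example, write $n_i$ for the number of observations in group $i$ (row $i$) and $N = n_1 + n_2 + n_3$ for the overall total; because of the complete separation, $n_i$ is also the total of the single column that group $i$ occupies (taken here to be column $i$). Under independence, the expected cell count for cell $(i, j)$ is $\frac{n_i}{N}\frac{n_j}{N}N = \frac{n_i n_j}{N}$.

If we consider the contribution of group $1$ to $\chi^2$, we start with $\sum_j (O_{1j} - E_{1j})^2 / E_{1j}$ and end up with $N - n_1$ (the two off-diagonal cells have observed counts of $0$, so each contributes just its expected count):

$$
\begin{aligned}
\chi^2_{\text{row 1}} &= \frac{\left(n_1 - n_1^2/N\right)^2}{n_1^2/N} + \frac{n_1 n_2}{N} + \frac{n_1 n_3}{N} \\
&= \frac{(N - n_1)^2}{N} + \frac{n_1 (N - n_1)}{N} \\
&= N - n_1
\end{aligned}
$$

If we repeat this on rows 2 and 3 of the table, we will find that $\chi^2_{\text{row 2}} = N - n_2$, and $\chi^2_{\text{row 3}} = N - n_3$, so

$$\chi^2 = (N - n_1) + (N - n_2) + (N - n_3) = 3N - N = 2N$$

And

$$\frac{\chi^2}{N} = 2$$

So, under this scenario of extreme separation between groups,

$$V = \sqrt{\frac{\chi^2/N}{\min(r-1, c-1)}} = \sqrt{\frac{2}{2}} = 1$$

where $\min(r - 1, c - 1) = 2$, since the table has three rows (one per group).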
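The two boundary cases can also be checked numerically. The sketch below uses made-up counts of my own (the `separated` and `independent` tables and the `cramers_v` helper are hypothetical, not taken from the tables pictured above): one table with complete separation of three groups across four responses, and one whose cells exactly match the expected counts under independence.

```r
# Cramér's V from a two-way table of counts
cramers_v <- function(tab) {
  x2 <- unname(chisq.test(tab, correct = FALSE)$statistic)
  sqrt((x2 / sum(tab)) / (min(dim(tab)) - 1))
}

# complete separation: each response column is used by one group only
separated <- matrix(c(40, 60,   0,  0,
                       0,  0, 150,  0,
                       0,  0,   0, 50), nrow = 3, byrow = TRUE)

# exact independence: every cell equals (row total * column total) / N
independent <- outer(c(300, 500, 200), c(0.2, 0.3, 0.4, 0.1))

v_sep <- cramers_v(separated)     # chi-squared is 2N here, so V is 1
v_ind <- cramers_v(independent)   # chi-squared is (numerically) 0, so V is 0

c(separated = v_sep, independent = v_ind)
```

In the separated table the first two response columns both belong to group 1, so collapsing them leaves $\chi^2$ unchanged, which is the equivalence the argument above relies on.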